Summary

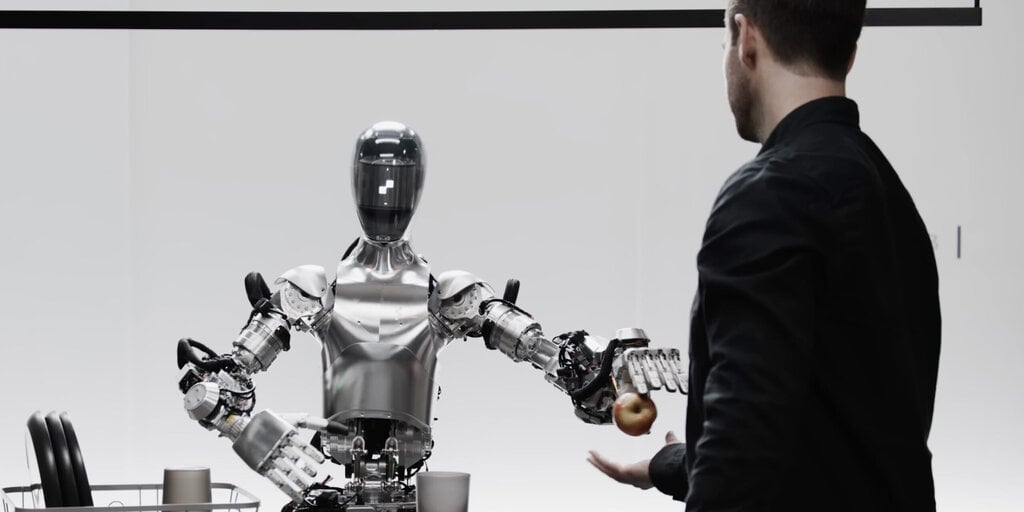

Robotics developer Figure showcased its first humanoid robot, Figure 01, holding real-time conversations powered by generative AI from OpenAI. The robot can understand and react to human interactions while multitasking. It can describe what it sees, plan future actions, reflect on its memory, and explain its reasoning verbally. Its behavior is learned rather than remotely controlled, and it relies on a multimodal model trained by OpenAI. The debut sparked excitement on Twitter, where many users were impressed by the robot’s capabilities. It comes as policymakers and global leaders grapple with the integration of AI tools into mainstream society. Figure AI engineer Corey Lynch provided technical details about the neural network visuomotor transformer policies that drive Figure 01’s actions. The development of AI-powered humanoid robots like Figure 01 could have implications for space exploration and other industries.

Key Points

1. Robotics developer Figure showcased its first humanoid robot engaging in real-time conversations using generative AI from OpenAI.

2. The alliance with OpenAI lets Figure incorporate high-level visual and language intelligence into its robots, which is paired with the fast, low-level, dexterous actions driven by Figure’s neural network policies.

3. Figure 01 demonstrated that it can interact with humans, identify objects, multitask, and hold conversations.